Dec 19, 2009

Published by Tony Primerano

Back in February 2003 we got about 2 feet of snow in Montgomery County, Maryland. The snow plows completely ignored the side streets and focused on the primary roads.

The result? 3 days after the storm my street looked like this.

When the small plows finally came they were unable to deal with the heavy/wet snow so it took them a while to clear the streets.

I hope this doesn't happen again. It's not like I live in the middle of nowhere. 300 ft up my street is 7 Locks Road which was kept clear. Unfortunately, "up" is the operative word here and no one was able to escape before the plows came.

I'm still hoping FedEx makes it today, but the mailman got stuck a few hours ago so I'm not counting on it. :-( I did make the mailman's day by helping him get out.

Update

I'm happy to report that my street was plowed around 4PM on the 20th so we were snowed in for less than 48 hours.

Nov 4, 2009

Published by Tony Primerano

I've been using Rackspace's Cloud Servers for months now and I thought moving some of our standard PHP apps (like WordPress) to Cloud Sites would save me some time as a sysadmin/developer. I also figured I would set up a database there and use it for my Rails application that runs on Cloud Servers (instead of building my own or using Amazon's RDS).

I'm sorry to say that after a few days of working with Cloud Sites, I think managing the sites myself on Cloud Servers would be easier. Fortunately, many of the problems I am having can be easily fixed.

My issues

1) No rsync or scp access. Installing a WordPress site via FTP or Rackspace's file manager was just plain painful. The file manager didn't allow me to move files and it always extracted zip files to the root directory. Maybe it works correctly on IE? I only run Linux so I have no way of knowing.

2) No easy way to do backups. Cloud Sites allows you to run cron jobs but the example backups build tarballs on the same host that the site is running on. When I saw they had Ruby as a cron shell option I assumed they had the CloudFiles gem installed, but they didn't. I want my backups moved off the host. I don't want to log into my account and download them manually.

3) No access to database binary logs. I like to take a snapshot every 15 minutes or so in case I lose my database but this is not an option with the Cloud Sites database. You could run mysqldump from another host but you probably don't want to do this every 15 minutes.

4) For my Cloud Server Rails app there was no LAN address to access my Cloud Sites database so all my queries incurred bandwidth charges.

Feature Requests (easy to hard)

1) Give people a cron job (or simple backup tab like Cloud Servers has) that can be used to dump their database daily to Cloud Files. It's a win for everyone. The user gets automated backup and you get more Cloud Files revenue. Here is a script I run from a Cloud Server to back up my Cloud Sites database nightly (I cannot run it as a Cloud Sites job because the CloudFiles gem is not installed on the hosts, as I mentioned above).

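require 'rubygems'
require 'cloudfiles'

# The @... settings (account, key, MySQL details, paths) are assumed to be set earlier in the script.

# Run a shell command and raise if it does not exit successfully.
def run(command)
  result = system(command)
  raise("error, process exited with status #{$?.exitstatus}") unless result
end

# Connect to Cloud Files and open the container that holds the backups.
cf = CloudFiles::Connection.new(@account_name, @cloud_key)
container = cf.container(@directory_name)

# Build the mysqldump command; the dump is gzipped on its way to disk.
cmd = "mysqldump -C --opt -h #{@mysql_host} -u#{@mysql_user} "
cmd += " -p'#{@mysql_password}'" unless @mysql_password.nil?
cmd += " #{@mysql_database} | gzip > #{@backup_directory}#{@db_file}"
run(cmd)

# Upload the compressed dump to Cloud Files.
t = container.create_object(@db_file)
t.load_from_filename "#{@backup_directory}#{@db_file}"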

2) Advertise the LAN address of the Database Hosts... of course the address given now is probably a switch that hits several DB machines.

3) Fix the File Manager. Maybe it's just me but on Firefox/Linux moving files doesn't work. A crippled version of rsync may also be nice. I suspect there are security issues here but shell access sure would be nice.

4) This is probably hard but it would be nice if I could get access to the MySQL binary logs.

Thanks for listening. :-)

Sep 3, 2009

Published by Tony Primerano

Last October at BarCamp DC 2 I ran a session called “To Cloud or Not? AWS, EC2, S3 or build your own”. Unfortunately the BarCamp wiki died and my notes are gone, but at the time it seemed that everyone loved Amazon's services. I tried using EC2 in April and while the ability to select from several pre-configured AMIs was nice, building your own AMI should have been easier. I wanted to configure my machine and then push a button to have my image created. With Amazon you needed to install tools and go through several steps to create an image.

Then I found Slicehost. It was owned by Rackspace and had servers for as little as $20/month (for a 256MB instance). A few weeks later I stumbled on Mosso, also owned by Rackspace, and it had servers for about $11/month (plus bandwidth). Since my applications were using very little bandwidth, I moved to Mosso, which is now called The Rackspace Cloud. With the Rackspace offerings you install your operating system image, configure it and then back it up from their control panel with 1 click. You can also schedule backups. This was so much easier than EC2.

Then there is the pricing. Amazon's small instance is a big virtual machine and at $0.10/hour it runs around $70/month (I think it was $0.12/hour when I 1st started using it). This is probably a good price if you need that much horsepower. What could you possibly run from a 256MB instance anyway? Here's what I am running.

- A full Rails app using Apache/Passenger and MySQL (I had to remove several unused modules from the Apache config and my database is small at the moment)

- Apache PHP -- I don't have a database here but I suspect there is room

I suspect a 512MB instance is a safe bet for most applications and I will likely upgrade as my traffic and database size increase. Depending on the situation, I may just spin up more instances of the same server as redundancy is a good thing. Sure I could run everything on 1 AWS instance but if it dies I'm really SOL.

If you ever need a bigger slice you can upgrade in the control panel with 1 click. All your configurations and IP address are kept the same. I usually make a backup (1 click) before doing this just in case something bad happens.

Rackspace is still making improvements to their APIs and Image Management so while they don't offer as many services as Amazon, they have offered all the important features to make developers' lives easier, IMO.

For the record, I actually back up my Rackspace databases to Amazon's S3. I feel better knowing my backups are in a different datacenter. :-)

If you sign up (and found this post helpful) please use my referral code when creating your account. It is REF-TONYCODE

Feb 1, 2009

Published by Tony Primerano

Good wiki pages have good titles and the title is usually in the URL. This is all well and good but if you're creating pages on a corporate wiki that is hidden from the outside world, your title may be giving away too much information.

A few days ago one of my colleagues noticed this post on Twitter.

JonGretar: I think AOL is using Erlang for it's chat system redesign. (about 6 hours ago)

His initial thought was that all our candid discussions about Erlang on Twitter gave us away. But this wasn't the case.

JonGretar: @tumka Because of people viewing my erlang tutorial with referrer: wiki.office.aol.com/wiki/Open_Chat_Backend_Redesign (about 5 hours ago)

Oops. Fortunately our Chat Backend Redesign is not a secret and our wiki page has links to all sorts of external sites.

For those non-techies out there let me explain what a referrer is.

When you click a link on a web page, the destination site is sent information on what page sent it the traffic. The source page is the referrer (or referer as it is misspelled in the HTTP spec). There are several useful applications that use the referrer information that I won't discuss here. Naturally, Wikipedia has a good article on the subject. http://en.wikipedia.org/wiki/Referer

What would have been worse is if we had a page called wiki.office.aol.com/wiki/Technology_Acquisitions_In_Process and someone at company X noticed this referrer in their access logs. If company X was a public company that person might run off and buy a pile of stock based on this simple observation.
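To make the access-log scenario concrete, here is a minimal Ruby sketch of pulling the referrer out of one log line. It assumes the destination site logs in Apache's "combined" format; the sample line (IP address, timestamp, request path, user agent) is made up for illustration, and only the referrer URL comes from the tweet above. The names COMBINED_LOG and referrer_from_log_line are mine.

```ruby
# Matches one line of an Apache "combined" format access log.
# Capture 6 is the Referer header sent by the visitor's browser.
COMBINED_LOG = /\A(\S+) \S+ \S+ \[([^\]]+)\] "([^"]*)" (\d{3}) (\S+) "([^"]*)" "([^"]*)"/

# Returns the referrer URL from a log line, or nil when the line does not
# match or the browser sent no referrer (logged as "-").
def referrer_from_log_line(line)
  match = COMBINED_LOG.match(line)
  return nil unless match
  referrer = match[6]
  referrer == "-" ? nil : referrer
end

# Hypothetical log line; only the referrer URL is taken from the post.
sample = '203.0.113.7 - - [30/Jan/2009:10:15:32 -0500] ' \
         '"GET /erlang-tutorial HTTP/1.1" 200 5120 ' \
         '"http://wiki.office.aol.com/wiki/Open_Chat_Backend_Redesign" "Mozilla/5.0"'

puts referrer_from_log_line(sample)
```

Anyone who can read the destination site's logs sees the full URL of the page that held the link, which is why a descriptive internal page title can leak.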

Now most wikis are public and people wouldn't be creating pages like this in the public space. But more and more companies have internal wikis and it is becoming common to discuss things on these pages that should not be shared with the public. Rather than having to worry about your page titles giving away too much information, I have created a MediaWiki extension that will prevent referrer information from being sent to external sites.

Information on the extension can be found here.

http://www.mediawiki.org/wiki/Extension:HideReferringPage

Oct 20, 2008

Published by Tony Primerano

On Saturday, I spent the day at BarCampDC2. Like last year there were plenty of great sessions. I really wanted to discuss Amazon's EC2 as I think it is where most small companies should be moving their sites and I see huge business opportunities in this space. As the session board was being built I kept looking for something on Amazon's EC2, S3 or AWS. I had not used the services yet, but I was determined to discuss them as I knew many of the companies present were using them. In desperation, I grabbed a pen and a Post-it note and wrote "To Cloud or Not? AWS, EC2, S3 or build your own". I owned the session and I hoped I would have a few experts in the room so I could act as a moderator instead of presenter.

The room was packed and I started out by telling everyone that I had no presentation or experience with these technologies and hoped we had some experts in the room. As I expected there were plenty of experts in the room and we had a great discussion on what Amazon had to offer and other offerings that companies can leverage.

The room was filled with some of the greatest minds from the DC Tech scene.

More pics are here.


My notes from the session are here. Nikolas Coukouma helped clean them up and added some additional pointers.

After my session I attended several hard core geek sessions. As usual there were many sessions I was unable to attend. Maybe we can videotape the sessions next time?

I attended

- 11AM - Nitrogen Web Framework

- 1PM - Git

- 2PM - MySQL Optimization

- 3PM-5PM wandered through various sessions.

- 5PM - Beer! Great presentation by Chris Williams. I thought this was an early happy hour room but instead Chris schooled us on the history of beer.

Afterward we headed to McFadden's where I consumed several pints of Guinness. Fortunately I had taken the bus and metro from my house so getting home was a non-issue.

Thanks to the Center for Digital Imaging Arts at Boston University for letting us have the conference there and thanks to all the folks that helped put this together. Can we do this again in 6 months? :-)

Aug 15, 2008

Published by Tony Primerano

A few months ago I was asked to do a Technology Due Diligence of SocialThing. My 1st reaction was why do we need another aggregator? We have BuddyFeed and several technologies that are proven to scale. People always snicker when AOL and technology are mentioned in the same sentence but the fact of the matter is that we've been building products that need to scale to millions of users, on day one, for well over 15 years. There are some amazing software engineers at AOL.

Back to SocialThing.... ;-)

As TechCrunch and others have noted, there are several social aggregators out there, but SocialThing found a powerful niche in the LifeStream aggregation market. Let me start by defining what a LifeStream is and then I'll get to what makes SocialThing unique.

LifeStreams focus on feeds about your life.

- What you are doing right now

- What pictures you recently uploaded

- What you posted to your blog.

Feeds on news and other events that are published by 3rd parties are not part of a LifeStream. They may still be feeds but they are not part of your life.

Life with a single social network

If everyone updated their status, uploaded pictures and blogged on a single social network, LifeStreams would be easy. The standard Facebook News Feed would be all we needed to keep up to date with what our friends were up to. Of course, if there was a single network it would probably be pretty boring. Competition between social networks means we'll always have the latest and greatest features, and if we don't we'll eventually move to where the best features are.

The problem with this competition is that our online life is fragmented and our friends are in various places. If we want to keep up with everyone we need to sign into several services or find a way to aggregate information on the people we care about.

LifeStream Aggregators try to make this easy. I'll compare three of them here. These notes were made on my wiki a few months ago when I was hashing out what it was we were looking to buy.

FriendFeed

Many of the things our friends do are available via public feeds. This makes pulling them together in a meaningful way easy. FriendFeed makes this easy but you need to define what you want people to see in your feed (Me Feed) and then people can subscribe to your feed.

For example, I can quickly build a feed on FriendFeed that includes my Twitter, Pownce, Flickr and Blog feeds. Then my friends that use FriendFeed can subscribe to my feed to build a LifeStream of people they follow. FriendFeed makes this easy but it's another account you need to create.

AIM Buddy Feed

AIM has a buddy feed that does almost the same thing as FriendFeed. The AIM Buddy Feed feature is not well advertised but if you set it up your updates will show up in your friends' buddy lists. Set up your feed here. If AOL promotes this feature and your friends are already on AIM this will save you from registering at yet another site. The aggregation of all your buddies' LifeStreams has appeared at dashboard.aim.com at times and then disappeared. I hope it comes back soon.

SocialThing

SocialThing makes aggregation simpler than FriendFeed and AIM Buddy Feed because they took a different approach. Instead of requiring all your friends to join SocialThing they just pull your friends' feeds from the networks you already belong to. They also let you post messages to your various networks.

They can do this because they ask you for your name and password on these sites. Personally I find this scary. Some sites like Facebook can give 3rd party sites tokens that they can store and use to access your account so the password is never sent to the 3rd party site. But sites like Twitter do not have this capability so sites like SocialThing need to save your username and password.

What do you get for giving up your usernames and passwords? Power!

Some services like Twitter have APIs to fetch your aggregated LifeStream in a single call. This makes SocialThing's job easy. Other APIs require a 2 step process to get the aggregated LifeStream.

- Step 1 -- Fetch my friends

- Step 2 -- Fetch my friends' "Me" Feeds.

This second scenario presents a scaling nightmare. If I log in to SocialThing and I have 5 networks that require a 2 step process and approximately 50 friends in each, they need to make 5 + 50*5 = 255 calls any time I visit. They then need to keep polling these services to keep them up to date. This is a lot of work for SocialThing to be doing but it is also beating up on my 5 networks. As SocialThing grows its user base they might find their IP addresses blocked as they overload the sites they are polling to build LifeStreams.
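The arithmetic is easy to play with. Here is a small Ruby sketch of the call count for a single visit; the function name and the optional one_step_networks parameter are my own, and it assumes every 2 step network has the same number of friends.

```ruby
# Estimate the API calls needed to build one user's aggregated LifeStream.
# Each 2 step network costs 1 call for the friend list plus 1 call per
# friend for their "Me" feed. Each 1 step network costs a single call.
def calls_per_visit(two_step_networks, friends_per_network, one_step_networks = 0)
  friend_list_calls = two_step_networks
  me_feed_calls = two_step_networks * friends_per_network
  one_step_networks + friend_list_calls + me_feed_calls
end

puts calls_per_visit(5, 50)   # 5 + 5*50 = 255
```

Doubling the friend count to 100 per network pushes this to 505 calls per visit, before any background polling.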

Another nice feature that you get by giving SocialThing your password is the ability to send messages to your various networks from SocialThing. With 1 click you can update your status on Twitter, Facebook and Pownce.

What's next?

Honestly, I don't know. :-) At this point the product folks are in control. We have an opportunity to make a great product even better and to bring our AOL, AIM and Bebo users into the world of LifeStream aggregation.

May 16, 2008

Published by Tony Primerano

Two weeks ago I went to Omaha to attend the Berkshire Hathaway shareholders meeting. The meeting was great and Omaha was fun to explore, but 2 businesses left a mark on the entire trip.

1) As I checked out of my hotel at 4AM I noticed the charges were not consistent with my reservation. They were higher. I called the office when I arrived home and they fixed the charge.

2) Today as I looked at my credit card statement I noticed my rental car bill was about 70% higher than the estimated charge. I assumed they dropped a discount but when I called them, they said there was a $71 fuel surcharge (inc. tax). The needle was past full when I dropped the car off. If they tried hard they might have been able to squeeze a 1/2 gallon into the tank. They have now removed the charge.

Since these companies fixed the charges I'm not going to name them but I wonder if this is common on the shareholders' weekend. I suspect less than 50% of consumers would have noticed the discrepancy, resulting in more profit for these companies. But as Warren Buffett would say "we never want to trade reputation for money".

Post a comment if you had a similar experience in Omaha.

Apr 16, 2008

Published by Tony Primerano

Handles are the key to having applications update on the profile page without a user taking action. Some notes on how to do this on Facebook are here. Since Bebo has implemented most of the Facebook API this should be easy but I think some things (like infinite session keys) are still missing. Hoping someone will leave me a pointer proving me wrong on this. ;-)

By placing a handle on a user's page you can (in theory) push updates to a user's profile page without them interacting with the application. For example, if you had a "Washington Capitals Fans" application that pushed news to every user's profile that had the application, all these pages would have the same handle. Having a single handle means that a single update of the handle content would update all profiles using the application.

In other cases you might want a unique handle per profile. For example, if your application injected an RSS feed of the user's choice onto their profile.

Here is a simple example of setting a handle on a user's page. I use the user's UID as the handle in this case.

Set a handle on a user's profile

I then write an update.php file that injects the date on the user's profile

Update a handle on a user's profile

That's all fine and good but the update wasn't automatic. The user had to hit the update.php file. In a real application we would have a database of all the users and we could periodically update the handles, assuming there is an infinite session concept in the Bebo API. Since I could not figure out how to implement an infinite session, here is my hack.

I place a hidden image on the user's page that calls the update function.

Now when a user views their profile, the hidden image calls refresh.php and the handle content is updated. Unfortunately, the update happens after the page is rendered so you'll need to reload to see the change.

The code...

Set a handle on a user's profile and use hidden image for update

It should also be noted that any time someone views your page they will call update.php. If they also have the application installed, your handle will be updated.

Assuming infinite session keys are unavailable, I would leverage app users to update their peers. In your database of users keep track of the last time they were updated. Any time the update.php function is called, do an update on all handles that haven't been updated in a specific period of time. As the user base grows this should allow for a more even distribution of updates. This might even be better than the cron jobs that infinite session key users rely on.
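Here is a rough Ruby sketch of that bookkeeping, not the PHP from the app itself. Everything in it is hypothetical: HandleRecord stands in for a row in the users table, and the block passed to refresh_stale_handles stands in for whatever call pushes new content to a handle. The limit parameter is my addition so that one page view never triggers an unbounded number of updates.

```ruby
# Hypothetical stand-in for a row in the application's user database.
HandleRecord = Struct.new(:uid, :last_updated)

# Handles whose last update is older than max_age_seconds.
def stale_handles(records, now, max_age_seconds)
  records.select { |record| now - record.last_updated > max_age_seconds }
end

# Refresh up to `limit` stale handles. The block receives each uid and is
# expected to push fresh content for that handle. Returns the uids refreshed.
def refresh_stale_handles(records, now, max_age_seconds, limit = 25)
  stale_handles(records, now, max_age_seconds).first(limit).map do |record|
    yield record.uid
    record.last_updated = now
    record.uid
  end
end

now = Time.now
records = [
  HandleRecord.new("1001", now - 7200),  # updated two hours ago
  HandleRecord.new("1002", now - 60)     # updated a minute ago
]

# Called whenever any user hits the update script.
refreshed = refresh_stale_handles(records, now, 3600) do |uid|
  puts "pushing new content to handle #{uid}"
end
```

With a one hour threshold only the first record is refreshed here, so each visitor does a small share of the work on behalf of users who have not been seen recently.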

Apr 15, 2008

Published by Tony Primerano

Last week I spent some time learning how to build Bebo applications. Their API is just like the Facebook API but it is not complete and the documentation has some holes in it. If you haven't built a Facebook application the entire process can seem awkward.

The Bebo Developer Page makes it sound like a simple 3 step process

- Get the library

- Install the app

- Start Creating.

If you don't know how to write web applications in PHP, Java or Ruby stop now. You need to know at least one of these systems to continue.

I downloaded the PHP library and put it on my host. Next step was to install the developer application. Why? Why do I need to install an app to build apps? Seems weird at 1st but basically this application is used to manage the keys, locations and settings for your apps.

Once you have the app, launch it and click "Create new app" (way off on the right). Give your application a name, a URL and a callback URL.

What is the callback URL? It is the URL that is hit when a user goes to the Application URL. http://apps.bebo.com/yourappname is just a proxy that adds headers and parameters to the request before it hits your application file. The bebo library uses this information.

For example, I created an app called sample with a URL of http://apps.bebo.com/sample. My callback URL is http://bebo.tonycode.com/apps/sample

Hitting http://apps.bebo.com/sample results in a call to http://bebo.tonycode.com/apps/sample where I have the following index.php

Display_All_My_Friends

This application simply prints out all your friends.

Add the application to your page by going to the developer application, viewing the app and clicking the profile page for the app. Now go to your profile page and voilà... the app is there but not your friends.

Huh? How do I get the content to show up on my profile page? If I don't show it on the profile page it seems pretty pointless, right?

To add content to the profile page you use profile_setFBML. Last I checked the return codes for this function are not defined. Seems to return a 1 when it works and an array when it fails. Nice. :-\

Here is the code updated to put friends on profile too.

Display_All_My_Friends_on_my_Profile

Weeks go by and you add new friends to your profile and you notice that the list of friends in your custom application isn't changing. profile_setFBML is only called when you visit the application page. Well that stinks, you say. How can I make it update each time I visit the profile? I'll get to that in my next post.

Random Tips.

- Documentation is at http://developer.bebo.com/documentation.html

- The downloaded libraries have example code. Start there

- You may need to look at 2 different sections to piece together how a function works. For example user.getInfo has a fields parameter

- http://www.bebo.com/docs/api/UsersGetInfo.jsp

- Look at the SNQL users table to find what is available

- http://www.bebo.com/docs/snql/User.jsp

Mar 24, 2008

Published by Tony Primerano

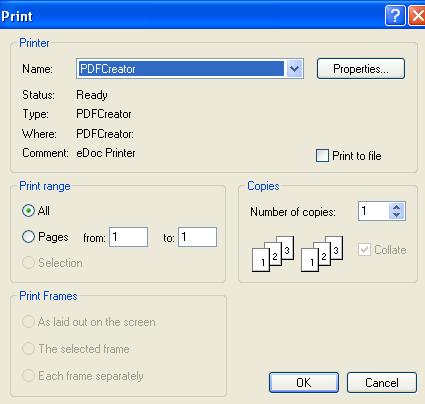

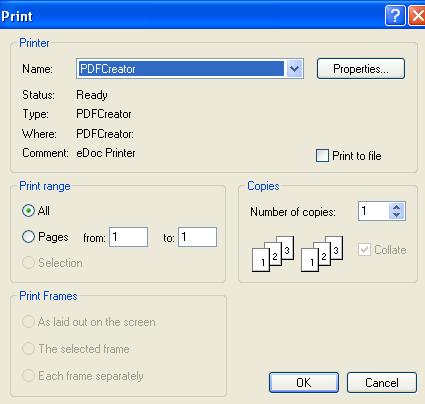

I always hated printing confirmation pages from web sites. Printing a piece of paper that'll end up in the recycle bin in a few weeks is just silly. There are also times that I'm not connected to a printer and I really need to save a copy of something I'm viewing.

My answer for the past few years has been PDFCreator. Install it and whenever you print, you can print to a PDF file instead of a printer. This month alone I think I've printed one paper page from my printer and about 90 pages to PDF form.

I also used PDFCreator to email proof pages for our daughter's yearbook to teachers. In the past printouts would be made for each teacher, they would mark them up and send them back. I've easily saved a ream of paper this year by doing this via email. Not to mention it's easier to manage email than paper.

Once installed, just use it like a printer. It will show up as a printer in the print dialog.

From there it will ask you for a title and then a name for your pdf. If you want to make a hardcopy later you can just print the pdf file you created.
